In computer science, data structures and algorithms are among the most important concepts. To decide which data structure and algorithm perform best for a task, we need to analyze the time complexity of the code. Time complexity is one of the most essential measures of an algorithm's efficiency: it describes how the algorithm's runtime grows as the size of its input grows. Understanding time complexity is therefore a crucial prerequisite for designing algorithms that process large amounts of data quickly and effectively.

Understanding Time Complexity: What is it?

Time complexity measures how much work code performs, not the exact clock time it takes to run. It counts the computational steps or operations an algorithm performs, expressed as a function of the input size. This estimates how the algorithm's running time scales as inputs get larger. Big O notation describes this growth rate as an upper bound on the algorithm's running time. For example, linear search has a time complexity of O(n), and the convex hull problem can be solved in O(n log n) time.
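The O(n) claim for linear search can be made concrete by counting comparisons instead of measuring wall-clock time. This is a minimal illustrative sketch. The function name `linear_search` and the comparison counter are our own assumptions and do not come from any particular library.

```python
def linear_search(items, target):
    """Return (index, comparisons); index is -1 if target is absent."""
    comparisons = 0
    for i, value in enumerate(items):
        comparisons += 1  # one basic operation: compare value to target
        if value == target:
            return i, comparisons
    return -1, comparisons


# Worst case (target absent): the comparison count equals len(items), i.e. it grows like n.
for n in (10, 100, 1000):
    data = list(range(n))
    _, steps = linear_search(data, -1)
    print(n, steps)
```

Because the count in the worst case equals the input size, multiplying n by 10 multiplies the work by 10. That proportional growth is exactly what O(n) expresses.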

Factors Influencing Time Complexity

Several factors contribute to the time complexity of an algorithm:

1. Number of Operations: Time complexity is concerned with the number of basic operations an algorithm performs. These operations include, but are not limited to, comparisons, assignments, and arithmetic operations.

2. Input Size: Time complexity is expressed in terms of the input size, so larger inputs generally mean more work. Depending on the algorithm, the number of operations may grow linearly, quadratically, or even exponentially as the input size rises.

3. Algorithm Design: Design decisions, such as which data structures to use and whether to rely on loops or recursion, affect an algorithm's time complexity. An algorithm with lower time complexity generally runs faster on large inputs.
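The three factors above interact, and a small experiment shows how. The following sketch uses a hypothetical task of our own choosing: checking a list for duplicates. It solves the task two ways and counts the basic operations each version performs. The function names are illustrative only. The set-based version relies on Python's hash-set membership test, which is O(1) on average under typical hashing, not in the worst case.

```python
def has_duplicate_pairwise(items):
    """Compare every pair: about n*(n-1)/2 comparisons in the worst case, O(n^2)."""
    comparisons = 0
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            comparisons += 1
            if items[i] == items[j]:
                return True, comparisons
    return False, comparisons


def has_duplicate_with_set(items):
    """Single pass with a hash set: n membership checks, O(n) on average."""
    seen = set()
    lookups = 0
    for value in items:
        lookups += 1
        if value in seen:  # average O(1) hash lookup
            return True, lookups
        seen.add(value)
    return False, lookups


data = list(range(1000))  # no duplicates: the worst case for both versions
print(has_duplicate_pairwise(data))  # (False, 499500)
print(has_duplicate_with_set(data))  # (False, 1000)
```

Both functions answer the same question on the same input. Choosing a set instead of nested loops reduces the work from roughly n²/2 comparisons to about n lookups.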

Analyzing Time Complexity: Big O Notation

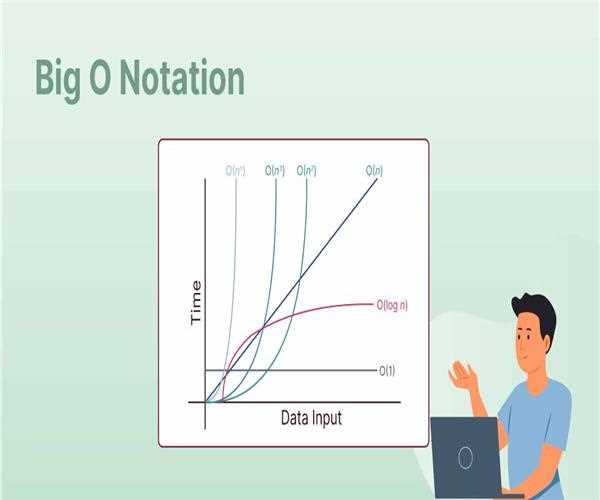

Big O notation provides a standardized way to describe an upper bound on an algorithm's running time as a function of the input size. It is most often used for the worst case, describing how the runtime grows in the least favorable situation as the input gets larger. Common time complexities represented in Big O notation include:

1. O(1) - Constant Time Complexity: Constant-time algorithms perform a fixed number of operations regardless of the input size. Accessing an array element by its index and simple arithmetic operations are typical examples.

2. O(log n) - Logarithmic Time Complexity: The algorithm's runtime grows logarithmically with the size of the input, typically because each step shrinks the remaining problem by a constant fraction. Binary search, which halves the search range at every step, is the classic example. Operations on balanced search trees also usually have logarithmic time complexity.

3. O(n) - Linear Time Complexity: The algorithm's runtime grows linearly with the input size. Linear time complexity appears when an array or list is traversed once, in a single pass.

4. O(n^2) - Quadratic Time Complexity: The algorithm's runtime is proportional to the square of the input size. Algorithms built on nested loops over the input, such as those that compare every pair of elements, have quadratic time complexity. Bubble sort and insertion sort are common examples.

5. O(2^n) - Exponential Time Complexity: The runtime roughly doubles each time the input size grows by one. Many brute-force algorithms, such as those that try every subset of the input, and some naive recursive algorithms exhibit exponential time complexity.
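Each class in the list above can be illustrated with a short function. The examples in this minimal sketch are our own illustrative choices: the first-element lookup, the summation, and the naive recursive Fibonacci are not taken from the text. Binary search and bubble sort correspond to the algorithms the list names. The `binary_search` helper also returns a step count so that its logarithmic growth is visible.

```python
def constant_first(items):
    """O(1): indexing costs the same no matter how long items is."""
    return items[0]


def binary_search(sorted_items, target):
    """O(log n): halve the search range each step. Returns (index or -1, steps)."""
    lo, hi = 0, len(sorted_items) - 1
    steps = 0
    while lo <= hi:
        steps += 1
        mid = (lo + hi) // 2
        if sorted_items[mid] == target:
            return mid, steps
        if sorted_items[mid] < target:
            lo = mid + 1
        else:
            hi = mid - 1
    return -1, steps


def linear_sum(items):
    """O(n): visit each element exactly once."""
    total = 0
    for value in items:
        total += value
    return total


def bubble_sort(items):
    """O(n^2): nested loops compare adjacent pairs; returns a new sorted list."""
    result = list(items)
    n = len(result)
    for i in range(n):
        for j in range(n - 1 - i):
            if result[j] > result[j + 1]:
                result[j], result[j + 1] = result[j + 1], result[j]
    return result


def fib(n):
    """Bounded above by O(2^n): each call spawns two more calls (naive recursion)."""
    if n < 2:
        return n
    return fib(n - 1) + fib(n - 2)


print(constant_first([4, 8, 15]))             # 4
print(binary_search(list(range(1024)), -1))   # (-1, 10): 10 steps for 1024 items
print(linear_sum(range(10)))                  # 45
print(bubble_sort([5, 1, 4, 2, 8]))           # [1, 2, 4, 5, 8]
print(fib(10))                                # 55
```

Binary search needs only 10 steps for 1,024 items because log2(1024) = 10. In contrast, `fib` recomputes the same subproblems repeatedly, which is why its call count explodes as n grows.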

Time Complexity in Practice

Understanding time complexity has practical implications for software development and algorithm optimization:

1. Algorithm Selection: Knowing the time complexity of candidate algorithms, along with the expected size of the input, helps developers choose the algorithm best suited to an application. For large inputs, an algorithm with lower time complexity is usually the better choice. On small inputs, however, constant factors can make a simpler algorithm faster.

2. Performance Optimization: Analyzing time complexity helps locate bottlenecks. Replacing an inefficient step, for example a quadratic nested loop, with a lower-complexity alternative can make software substantially faster and more scalable.

3. Scalability: Algorithms with low time complexity keep performing well as datasets grow. This makes their performance robust and makes them practical to deploy on real-world workloads.
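One rough way to reason about scalability is a "doubling test": compare how much the work grows when the input size doubles. The following sketch is illustrative only. It counts loop iterations as a stand-in for basic operations, and the helper names are hypothetical. Real timings also depend on constant factors, caching, and the runtime, so measured ratios will be noisier than these counts.

```python
def count_linear_ops(n):
    """Basic operations for a single pass over n items."""
    ops = 0
    for _ in range(n):
        ops += 1
    return ops


def count_quadratic_ops(n):
    """Basic operations for visiting every (i, j) index combination of n items."""
    ops = 0
    for _ in range(n):
        for _ in range(n):
            ops += 1
    return ops


def doubling_ratio(count_ops, n):
    """How much the work grows when the input size doubles from n to 2n."""
    return count_ops(2 * n) / count_ops(n)


for n in (100, 200, 400):
    print(n, doubling_ratio(count_linear_ops, n), doubling_ratio(count_quadratic_ops, n))
# Linear work doubles (ratio 2.0); quadratic work quadruples (ratio 4.0).
```

A ratio near 2 when the data doubles suggests linear scaling, while a ratio near 4 signals quadratic growth that will become a problem on large datasets.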

Conclusion

Time complexity is a cornerstone of algorithm analysis and design, giving developers a basis for building efficient and scalable software. By understanding time complexity and using methods to analyze and optimize it, developers can write high-quality algorithms that solve complex computational problems efficiently. From simple routines to complex algorithm development, reasoning about time complexity is a key skill for writing reliable, high-quality programs.
