YARN’s Resource Management

The most important component of YARN is the Resource Manager, which governs and maintains all the data processing resources in the Hadoop…

Data Replication in Hadoop: Slave Node Disk Failures (Part 2)

In Part 1 (Data Replication in Hadoop: Replicating Data Blocks), I explained how HDFS replicates data blocks. In this post, I explain slave node…

Writing and Reading Data from HDFS

Writing data: To create a new file in HDFS, a sequence of steps has to take place (refer to the adjoining figure for the components involved): 1. The…

HDFS Federation and High Availability

Before Hadoop 2 came into the picture, Hadoop clusters had to live with the fact that the NameNode limited how far they could scale.

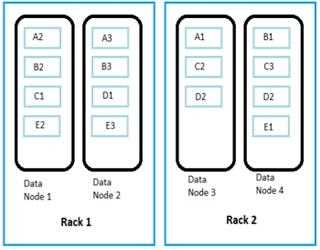

Storing Data in HDFS

Just to be clear, storing data in HDFS is not entirely the same as saving files on your personal computer. In fact, quite a number of differences exist…

Hadoop Distributions: Cloudera

We have seen that the Hadoop ecosystem has several components, each of which exists as its own Apache project. Since Hadoop has become extremely…

Pros and Cons of the Hadoop System

As with any tool, it's important to understand when Hadoop is a good fit for the problem in question. The architecture choices made within Hadoop enable…

Graph Analysis with Hadoop

We are all familiar with log data, relational data, text data, and binary data, but we will soon be hearing more about another form of information: graph data.

Image Classification with Hadoop

Image classification starts with building a training set so that computers can learn to recognize and categorize what they are looking at.

Social Sentiment Analysis with Hadoop

Social sentiment analysis is easily the most hyped of the Hadoop use cases, which should be no surprise, given that we live in a world with a constantly connected and expressive population.

Risk Modelling with Hadoop

Risk modelling is another major use case that Hadoop has energized. You will find that it closely resembles the fraud detection use case, in that both are model-based disciplines.

Data Warehousing with Hadoop

Data warehouses are being pushed to their limits, trying to meet growing demands with finite resources. The sudden growth in the volumes of data set…