This article explains Self-Organizing Maps (SOMs): the principles behind them, how they are trained, where they are applied, and their main strengths and limitations.

Understanding Self-Organizing Maps (SOM):

Self-Organizing Maps are a type of unsupervised neural network developed by the Finnish scientist Teuvo Kohonen in the 1980s, which is why they are also known as Kohonen maps. Unlike neural networks trained with supervised learning on labeled examples, SOMs learn from unlabeled data and project high-dimensional inputs onto a low-dimensional grid in a way that preserves much of the structure of the input space.

Principles of Self-Organizing Maps:

Neuron Organization:

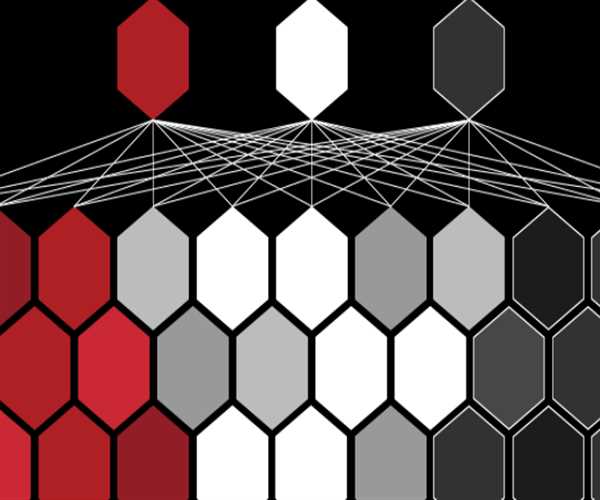

The core principle of a SOM lies in its arrangement of neurons in a low-dimensional grid, usually a two-dimensional one. Each neuron is associated with a weight vector that has the same dimension as the input data, and after training the arrangement reflects the topology of the input data.
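As a minimal sketch of this layout, the grid can be stored as a nested list in which each cell holds one weight vector. The function name `init_som`, the grid size, and the uniform random initialization are illustrative assumptions, not a prescribed implementation.

```python
import random

def init_som(rows, cols, dim, seed=0):
    """Build a rows x cols grid; each cell holds a weight vector of length dim.

    Weights are drawn uniformly from [0, 1), which assumes the input data
    has been scaled to a similar range.
    """
    rng = random.Random(seed)
    return [[[rng.random() for _ in range(dim)] for _ in range(cols)]
            for _ in range(rows)]

som = init_som(rows=3, cols=4, dim=2)
print(len(som), len(som[0]), len(som[0][0]))  # prints: 3 4 2
```

The position of a neuron on the grid, `(row, col)`, is fixed; only the weight vector stored at that position changes during training.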

Competitive Learning:

During training, input data are presented to the SOM one sample at a time, and the neurons compete to respond to each input pattern. The neuron whose weight vector is most similar to the input, typically measured by Euclidean distance, is the winner, often called the best matching unit (BMU). The winner and its neighbors on the grid then adjust their weights toward the input.
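The competition step can be sketched as a search for the neuron with the smallest distance to the input. This example assumes Euclidean distance as the similarity measure and uses a hand-written 2x2 map; the helper name `find_bmu` is hypothetical.

```python
import math

def find_bmu(som, x):
    """Return the (row, col) of the neuron whose weights are closest to x."""
    best, best_dist = None, math.inf
    for r, row in enumerate(som):
        for c, w in enumerate(row):
            d = math.dist(w, x)  # Euclidean distance (Python 3.8+)
            if d < best_dist:
                best, best_dist = (r, c), d
    return best

# A tiny 2x2 map with hand-picked weight vectors, for illustration only.
som = [[[0.0, 0.0], [1.0, 0.0]],
       [[0.0, 1.0], [1.0, 1.0]]]
winner = find_bmu(som, [0.9, 0.2])
print(winner)  # prints: (0, 1)
```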

Topology Preservation:

A key feature of SOMs is their ability to preserve the topology of the input space. Neurons that are close to each other in the grid respond to similar input patterns, so the spatial relationships in the input data are largely maintained in the map.
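One common way to check how well a map preserves topology is the topographic error: the fraction of samples whose best and second-best matching neurons are not adjacent on the grid. The sketch below assumes Euclidean distance and treats the eight surrounding cells as adjacent; the function name and the toy maps are illustrative.

```python
import math

def topographic_error(som, data):
    """Fraction of samples whose two closest neurons are not grid neighbors."""
    cells = [(r, c) for r in range(len(som)) for c in range(len(som[0]))]
    errors = 0
    for x in data:
        ranked = sorted(cells, key=lambda rc: math.dist(som[rc[0]][rc[1]], x))
        (r1, c1), (r2, c2) = ranked[0], ranked[1]
        # Adjacent means at most one step apart in both row and column.
        if max(abs(r1 - r2), abs(c1 - c2)) > 1:
            errors += 1
    return errors / len(data)

data = [[0.1], [0.9]]
ordered = [[[0.0], [0.5], [1.0]]]    # weights increase along the grid
scrambled = [[[0.0], [1.0], [0.5]]]  # similar weights are far apart on the grid
print(topographic_error(ordered, data))    # prints: 0.0
print(topographic_error(scrambled, data))  # prints: 0.5
```

A value near zero suggests that neighboring neurons respond to similar inputs; higher values indicate folds or twists in the map.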

Adaptability and Plasticity:

SOMs are adaptive and exhibit plasticity. As they process more data, their weight vectors adjust to capture the underlying patterns in the input space. This adaptability allows them to organize complex and high-dimensional data efficiently.

Training Process of Self-Organizing Maps:

Initialization:

The weight vectors of the neurons are initialized, commonly with small random values or with samples drawn from the training data.

Presentation of Input Data:

Input data are presented to the SOM, and the neuron with the weight vector closest to the input pattern is identified as the winner.

Weight Adjustment:

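The winning neuron, along with its neighbors, adjusts its weight vector to become more similar to the input pattern, with neurons closer to the winner on the grid adjusted more strongly. This step is crucial for learning the structure of the input data. The presentation and adjustment steps are repeated for many iterations while the learning rate and neighborhood size are gradually reduced, so the map first orders itself globally and then fine-tunes locally.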
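The three steps above can be combined into a short training loop. This is a sketch under stated assumptions rather than a reference implementation: it uses a Gaussian neighborhood function, exponential decay for both the learning rate and the neighborhood radius, and one randomly chosen sample per iteration. The name `train_som` and all default parameter values are illustrative.

```python
import math
import random

def train_som(data, rows, cols, n_iter=500, lr0=0.5, sigma0=None, seed=0):
    rng = random.Random(seed)
    dim = len(data[0])
    if sigma0 is None:
        sigma0 = max(rows, cols) / 2  # assumed starting radius
    cells = [(r, c) for r in range(rows) for c in range(cols)]

    # Initialization: random weights in [0, 1).
    som = [[[rng.random() for _ in range(dim)] for _ in range(cols)]
           for _ in range(rows)]

    for t in range(n_iter):
        # Both the learning rate and the radius shrink over time.
        decay = math.exp(-t / n_iter)
        lr, sigma = lr0 * decay, sigma0 * decay

        # Presentation: pick a sample and find the winning neuron.
        x = rng.choice(data)
        br, bc = min(cells, key=lambda rc: math.dist(som[rc[0]][rc[1]], x))

        # Weight adjustment: pull the winner and its grid neighbors toward x.
        for r, c in cells:
            grid_d2 = (r - br) ** 2 + (c - bc) ** 2
            h = math.exp(-grid_d2 / (2 * sigma ** 2))  # 1.0 at the winner
            w = som[r][c]
            for i in range(dim):
                w[i] += lr * h * (x[i] - w[i])
    return som

data = [[0.1, 0.1], [0.9, 0.9], [0.1, 0.9], [0.9, 0.1]]
som = train_som(data, rows=3, cols=3)
```

Because the neighborhood weight `h` falls off with grid distance, neurons far from the winner barely move, which is what lets nearby neurons end up with similar weights.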

Applications of Self-Organizing Maps:

Data Visualization:

SOMs are widely used for visualizing high-dimensional data in a lower-dimensional space. They can reveal the inherent structure and relationships within the data, making complex datasets more interpretable.
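A common visualization built on a trained map is the U-matrix (unified distance matrix), which stores, for each neuron, the average distance between its weight vector and those of its grid neighbors. The sketch below assumes a 4-connected neighborhood and Euclidean distance; the weights are hand-picked for illustration.

```python
import math

def u_matrix(som):
    """Average weight-space distance from each neuron to its grid neighbors."""
    rows, cols = len(som), len(som[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            dists = [math.dist(som[r][c], som[nr][nc])
                     for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                     if 0 <= nr < rows and 0 <= nc < cols]
            # A 1x1 map has no neighbors, so fall back to 0.0.
            out[r][c] = sum(dists) / len(dists) if dists else 0.0
    return out

# A 1x4 map whose weights form two groups: {0.0, 0.1} and {0.9, 1.0}.
som = [[[0.0], [0.1], [0.9], [1.0]]]
u = u_matrix(som)
```

High values mark boundaries between groups of similar neurons (here, the two middle cells), while low values mark regions where neighboring neurons represent similar inputs. Plotting the matrix as a heat map is the usual next step.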

Clustering and Pattern Recognition:

SOMs excel in clustering similar data together. They are employed in tasks such as pattern recognition, where the network can identify and categorize input patterns based on their similarity.
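Once a map is trained, a simple way to use it for clustering is to assign each sample to its closest neuron and treat samples that share a neuron as one group. The following sketch assumes Euclidean distance and a hand-set 1x2 map standing in for a trained one; `assign_to_neurons` is a hypothetical helper name.

```python
import math
from collections import defaultdict

def assign_to_neurons(som, data):
    """Map each neuron position to the indices of the samples it wins."""
    cells = [(r, c) for r in range(len(som)) for c in range(len(som[0]))]
    groups = defaultdict(list)
    for idx, x in enumerate(data):
        bmu = min(cells, key=lambda rc: math.dist(som[rc[0]][rc[1]], x))
        groups[bmu].append(idx)
    return dict(groups)

som = [[[0.1, 0.1], [0.9, 0.9]]]  # stand-in for a trained map
data = [[0.0, 0.2], [1.0, 0.8], [0.2, 0.1], [0.8, 1.0]]
groups = assign_to_neurons(som, data)
print(groups)  # prints: {(0, 0): [0, 2], (0, 1): [1, 3]}
```

On larger maps, several neighboring neurons often represent one cluster, so the neuron-level groups are sometimes merged in a second step.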

Feature Extraction:

SOMs are effective in extracting important features from input data. By learning the underlying structure, they can highlight key characteristics that contribute to the organization of the data.

Speech and Image Processing:

In speech and image processing, SOMs are utilized for tasks such as speech recognition and image segmentation. They can organize and recognize complex patterns within these data types.

Market Analysis and Customer Segmentation:

SOMs find applications in market analysis and customer segmentation. They can group similar customers based on their preferences and behaviors, providing valuable insights for targeted marketing strategies.

Advantages of Self-Organizing Maps:

Unsupervised Learning:

SOMs operate on unsupervised learning principles, making them suitable for tasks where labeled data may be scarce or difficult to obtain.

Topology Preservation:

The ability of SOMs to preserve the topology of the input space is a distinct advantage. It allows for meaningful visualization and interpretation of complex data structures.

Robustness:

SOMs tend to be robust to moderate noise and outliers in the data. Because each neuron's weights are shaped by many samples, the map can capture the underlying patterns even in the presence of variations and irregularities.

Challenges and Considerations:

Parameter Sensitivity:

The performance of SOMs is sensitive to parameter choices, such as the learning rate, the neighborhood size, the grid dimensions, and the number of training iterations. Careful tuning is essential for good results.
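To see why these choices matter, it helps to print how the learning rate and neighborhood radius evolve under a given schedule. The sketch below assumes exponential decay and a Gaussian neighborhood, which are common but not the only options; the starting values and the time constant are arbitrary illustrations.

```python
import math

def exponential_decay(initial, t, time_constant):
    """Value of a parameter after t iterations of exponential decay."""
    return initial * math.exp(-t / time_constant)

def neighborhood_weight(grid_distance, sigma):
    """Gaussian influence of the winner on a neuron grid_distance cells away."""
    return math.exp(-grid_distance ** 2 / (2 * sigma ** 2))

for t in (0, 250, 500, 1000):
    lr = exponential_decay(0.5, t, time_constant=500)
    sigma = exponential_decay(3.0, t, time_constant=500)
    # Influence on a neuron two cells away from the winner.
    h = neighborhood_weight(2, sigma)
    print(f"t={t:4d}  lr={lr:.3f}  sigma={sigma:.3f}  h(2)={h:.3f}")
```

If the radius shrinks too quickly, distant neurons stop being updated before the map has ordered itself; if it shrinks too slowly, the map stays overly smooth and fine detail is lost.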

Complexity in Interpretation:

While SOMs provide valuable insights into the structure of data, interpreting the learned representations can be complex, especially in high-dimensional spaces.
